Config files

With docker we can make sure that our compute environment is reproducible, but that does not mean that all our experiments magically become reproducible. There are other factors that are important for creating reproducible experiments.

In this paper (highly recommended read) the authors tried to reproduce the results of 255 papers and tried to figure out which factors were significant to succeed. One of those factors was "Hyperparameters Specified" e.g. whether or not the authors of the paper had precisely specified the hyperparameters that were used to run the experiments. It should come as no surprise that this can be a determining factor for reproducibility. However it is not a given that hyperparameters are always well specified.

Configure experiments

There is really no way around it: deep learning contains a lot of hyperparameters. In general, a hyperparameter is any parameter that affects the learning process (e.g. the weights of a neural network are not hyperparameters because they are a consequence of the learning process). The problem with having many hyperparameters to control in your code is that if you are not careful and structure them it may be hard after running an experiment to figure out which hyperparameters were actually used. Lack of proper configuration management can cause serious problems with reliability, uptime, and the ability to scale a system.

One of the most basic ways of structuring hyperparameters is just to put them directly into your train.py script in

some object:

class my_hp:

batch_size: 64

lr: 128

other_hp: 12345

# easy access to them

dl = DataLoader(Dataset, batch_size=my_hp.batch_size)

the problem here is configuration is not easy. Each time you want to run a new experiment, you basically have to change the script. If you run the code multiple times without committing the changes in between then the exact hyperparameter configuration for some experiments may be lost. Alright, with this in mind you change strategy to use an argument parser e.g. run experiments like this:

This at least solves the problem with configurability. However, we again can end up losing experiments if we are not careful.

What we really want is some way to easily configure our experiments where the hyperparameters are systematically saved

with the experiment. For this we turn our attention to Hydra, a configuration tool that is based

around writing config files to keep track of hyperparameters. Hydra operates on top of

OmegaConf which is a yaml based hierarchical configuration system.

A simple yaml configuration file could look like

with the corresponding Python code for loading the file

from omegaconf import OmegaConf

# loading

config = OmegaConf.load('config.yaml')

# accessing in two different ways

dl = DataLoader(dataset, batch_size=config.hyperparameters.batch_size)

optimizer = torch.optim.Adam(model.parameters(), lr=config['hyperparameters']['learning_rate'])

or using hydra for loading the configuration

import hydra

@hydra.main(config_name="config.yaml")

def main(cfg):

print(cfg.hyperparameters.batch_size, cfg.hyperparameters.learning_rate)

if __name__ == "__main__":

main()

The idea behind refactoring our hyperparameters into .yaml files is that we disentangle the model configuration from

the model. In this way it is easier to do version control of the configuration because we have it in a separate file.

❔ Exercises

The main idea behind the exercises is to take a single script (that we provide) and use Hydra to make sure that everything gets correctly logged such that you would be able to exactly report to others how each experiment was configured. In the provided script, the hyperparameters are hardcoded into the code and your job will be to separate them out into a configuration file.

Note that we provide a solution (in the vae_solution folder) that can help you get through the exercise, but try to

look online for your answers before looking at the solution. Remember: it's not about the result; it's about the journey.

-

Start by installing hydra.

Remember to add it to your

requirements.txtfile. -

Next, take a look at the

vae_mnist.pyandmodel.pyfiles and understand what is going on. It is a model we will revisit during the course. -

Identify the key hyperparameters of the script. Some of them should be easy to find, but at least three have made it into the core part of the code. One essential hyperparameter is also not included in the script but is needed for the code to be completely reproducible (HINT: the weights of any neural network are initialized at random).

Solution

From the top of the file

batch_size,x_dim,hidden_dimcan be found as hyperparameters. Looking through the code it can be seen that thelatent_dimof the encoder and decoder,lrfor the optimzer, andepochsin the training loop are also hyperparameters. Finally, theseedis not included in the script but is needed to make the script fully reproducible, e.g.torch.manual_seed(seed). -

Write a configuration file

config.yamlwhere you write down the hyperparameters that you have found. -

Get the script running by loading the configuration file inside your script (using hydra) that incorporates the hyperparameters into the script. Note: you should only edit the

vae_mnist.pyfile and not themodel.pyfile. -

Run the script.

-

By default hydra will write the results to an

outputsfolder, with a sub-folder for the day the experiment was run and further the time it was started. Inspect your run by going over each file that hydra has generated and check that the information has been logged. Can you find the hyperparameters? -

Hydra also allows for dynamically changing and adding parameters on the fly from the command-line:

-

Try changing one parameter from the command-line.

-

Try adding one parameter from the command-line.

-

-

By default the file

vae_mnist.logshould be empty, meaning that whatever you printed to the terminal did not get picked up by Hydra. This is due to Hydra under the hood making use of the native python logging package. This means that to also save all printed output from the script we need to convert all calls toprintwithlog.info-

Create a logger in the script.

-

Replace all calls to

printwith calls tolog.info. -

Try re-running the script and make sure that the output printed to the terminal also gets saved to the

vae_mnist.logfile.

-

-

Make sure that your script is fully reproducible. To check this you will need two runs of the script to compare. Then run the

reproducibility_tester.pyscript asthe script will go over trained weights to see if they match and that the hyperparameters are the same. Note: for the script to work, the weights should be saved to a file called

trained_model.pt(this is the default of thevae_mnist.pyscript, so only relevant if you have changed the saving of the weights). -

Make a new experiment using a new configuration file where you have changed a hyperparameter of your own choice. You are not allowed to change the configuration file in the script but should instead be able to provide it as an argument when launching the script e.g. something like

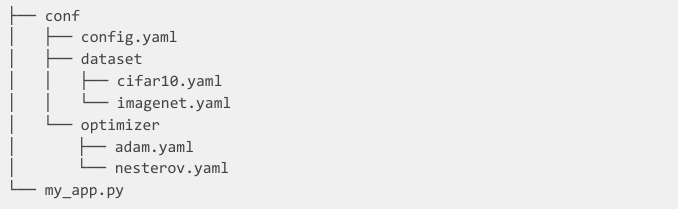

We recommend that you use a file structure like this

-

Finally, an awesome feature of hydra is the instantiate feature. This allows you to define a configuration file that can be used to directly instantiate objects in python. Try to create a configuration file that can be used to instantiate the

Adamoptimizer in thevae_mnist.pyscript.Solution

The configuration file could look like this

and the python code to load the configuration file and instantiate the optimizer could look like this

import os import hydra import torch.optim as optim @hydra.main(config_name="adam.yaml", config_path=f"{os.getcwd()}/configs") def main(cfg): model = ... # define the model we want to optimize # the first argument of any optimize is the parameters to optimize # we add those dynamically when we instantiate the optimizer optimizer = hydra.utils.instantiate(cfg.optimizer, params=model.parameters()) print(optimizer) if __name__ == "__main__": main()This will print the optimizer object that is created from the configuration file.

Final exercise

Make your MNIST code reproducible! Apply what you have just done to the simple script to your MNIST code. The only

requirement is that you this time use multiple configuration files, meaning that you should have at least one

model_conf.yaml file and a training_conf.yaml file that separates out the hyperparameters that have to do with

the model definition and those that have to do with the training. You can also choose to work with even more complex

config setups: in the image below the configuration has two layers such that we individually can specify

hyperparameters belonging to a specific model architecture and hyperparameters for each individual optimizer

that we may try.