Data drifting

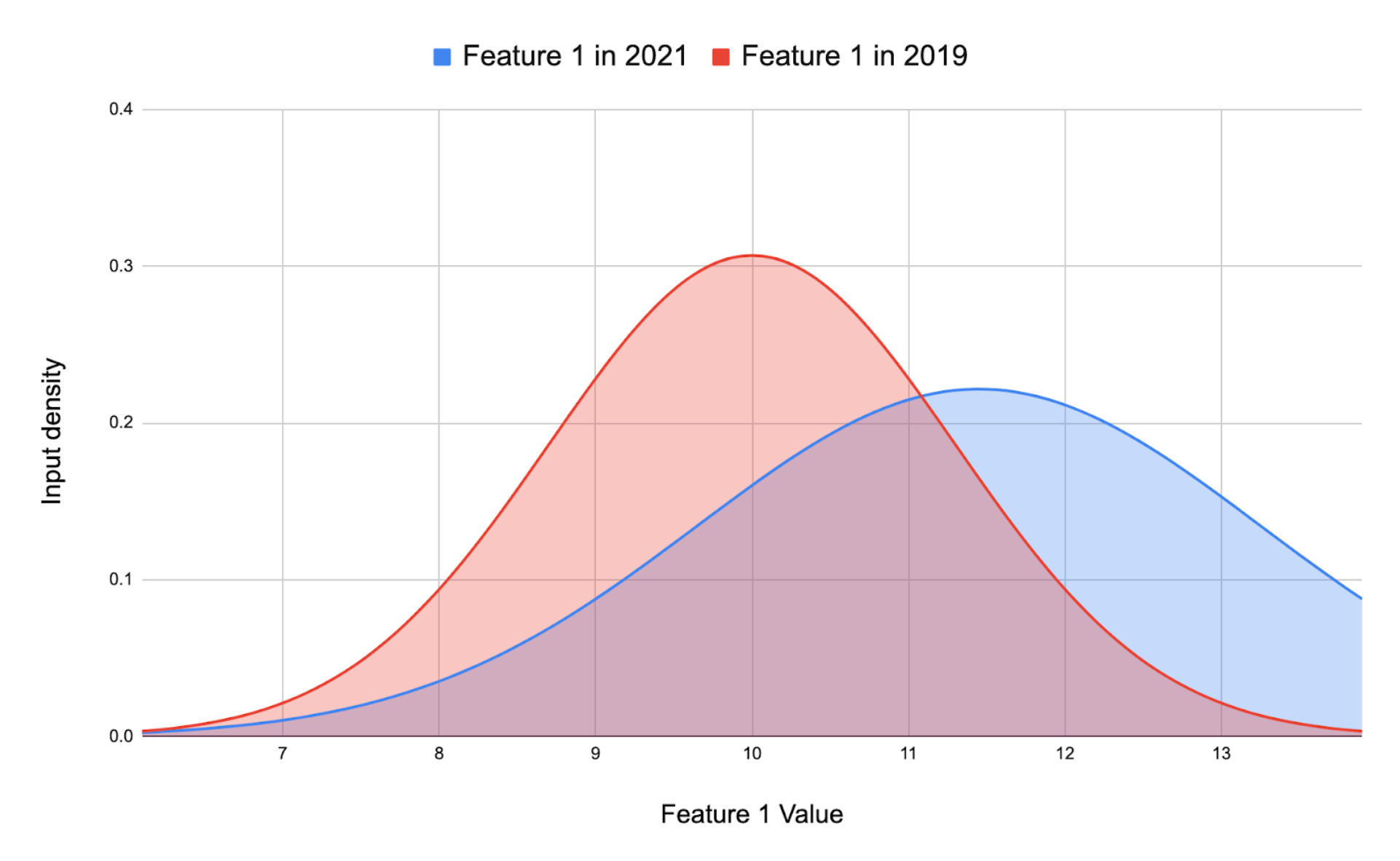

Data drifting is one of the core reasons why model accuracy degrades over time in production. For machine learning models, data drift is a change in the model input data that leads to degraded model performance. In practical terms, this means that the model is receiving input that is outside the scope of what it was trained on, as seen in the figure below. The figure shows the underlying distribution of a particular feature slowly increasing in value over two years.

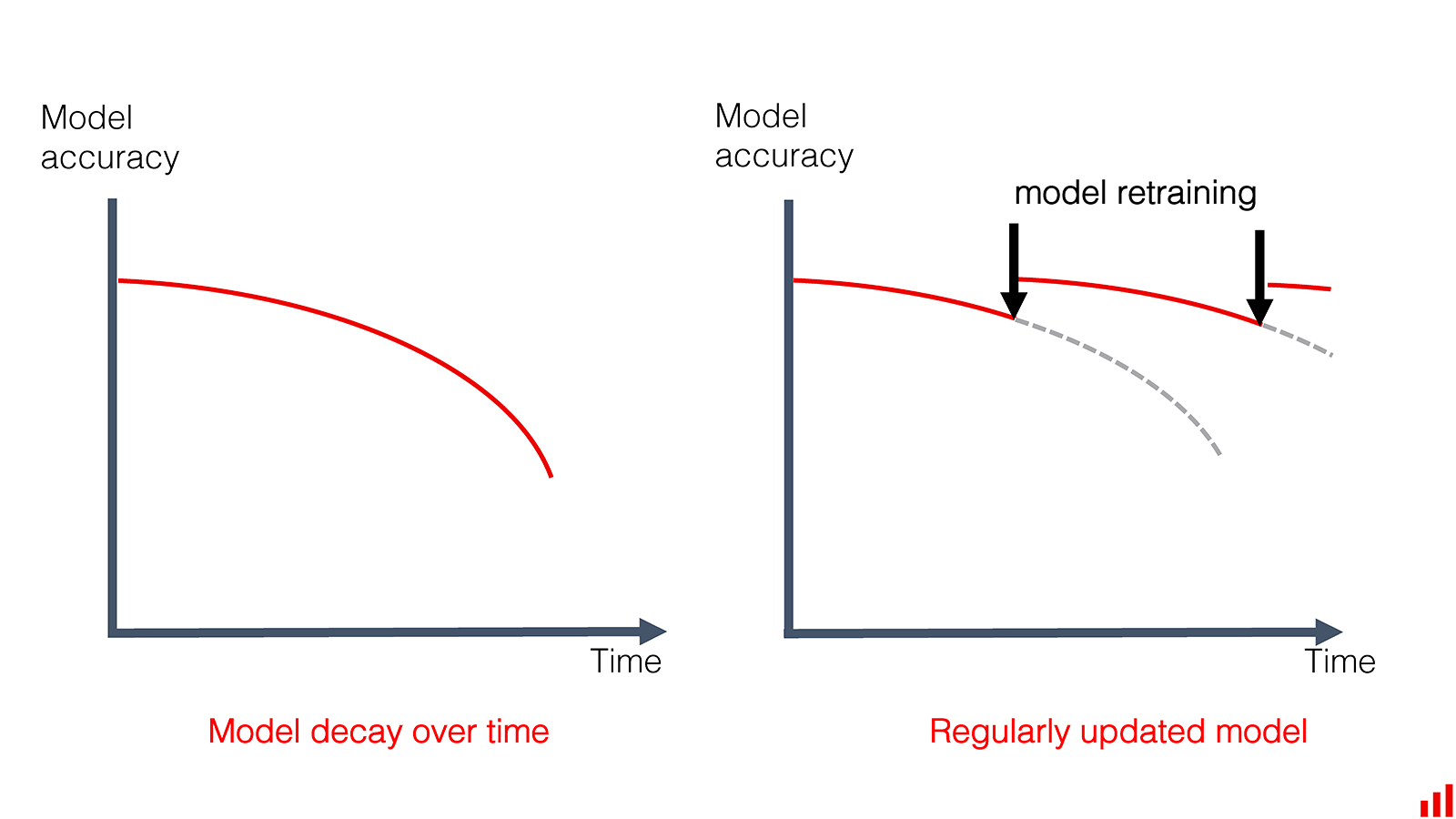

In some cases, normalizing a feature in a better way may help your model generalize better, but this is not always the case. Such a drift is commonly caused by some external factor that you essentially have no control over. That really only leaves you with one option: retrain your model on the newly received input features and deploy that model to production. This process is probably going to repeat over the lifetime of your application if you want to keep it up to date with the real world.

We have now come up with a solution to the data drift problem, but there is one important detail that we have not taken care of: when should we actually trigger the retraining? We do not want to wait around for our model performance to degrade, so we need tools that can detect when we are seeing a drift in our data.
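As a minimal sketch of what such a trigger could look like, the snippet below flags drift when the mean of a recent window of a feature has moved more than a chosen number of reference standard deviations away from the training mean. The function name `mean_shift_detected`, the threshold of 3 and the toy numbers are assumptions made for illustration; dedicated tools rely on proper statistical tests rather than this simple rule.

```python
from statistics import mean, stdev


def mean_shift_detected(reference, current, threshold=3.0):
    """Return True if the current mean is more than `threshold` reference
    standard deviations away from the reference mean."""
    ref_mean = mean(reference)
    ref_std = stdev(reference)
    shift = abs(mean(current) - ref_mean) / ref_std
    return shift > threshold


# Toy feature values: what the model was trained on vs. what it sees now.
training_values = [9.8, 10.1, 10.0, 9.9, 10.2, 10.0, 9.7, 10.3]
recent_ok = [10.0, 9.9, 10.1, 10.2]
recent_drifted = [11.5, 11.8, 12.1, 11.9]

print(mean_shift_detected(training_values, recent_ok))       # False
print(mean_shift_detected(training_values, recent_drifted))  # True
```

A check like this could run on a schedule and kick off retraining whenever it returns `True`.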

❔ Exercises

For these exercises we are going to use the framework Evidently developed by EvidentlyAI. Evidently currently supports drift detection for both regression and classification models. The exercises are in large part taken from here, and in general we recommend that you look at the docs for the API and examples if you are in doubt about an exercise (their documentation can be a bit lacking sometimes, so you may also have to dive into the source code).

Additionally, we want to stress that data drift detection, concept drift detection etc. is still an active field of research, and there are therefore multiple frameworks for doing this kind of detection. In addition to Evidently, we can also mention NannyML, WhyLogs and Deepchecks.

-

Start by installing Evidently. You will also need `scikit-learn` and `pandas` installed if you do not already have them.

-

Hopefully you have already gone through session S7 on deployment. As part of the deployment to GCP functions you should have developed an application that can classify the iris dataset, based on a model trained by this script. We are going to convert this into a FastAPI application for the purpose here:

-

Convert your GCP function into a FastAPI application. The appropriate `curl` command should look something like this:

```bash
curl -X 'POST' \
  'http://127.0.0.1:8000/iris_v1/?sepal_length=1.0&sepal_width=1.0&petal_length=1.0&petal_width=1.0' \
  -H 'accept: application/json' \
  -d ''
```

and the response body should look like this:

We have implemented a solution in this file (called v1) if you need help.

-

Next we are going to add some functionality to our application. We need the input from the user to be saved to a database whenever our application is called. However, to not slow down the response to our user, we want to implement this as a background task. A background task is a function that is executed after the user has received their response. Implement a background task that saves the user input to a database implemented as a simple `.csv` file. You can read more about background tasks here. The header of the database should look something like this:

```txt
time, sepal_length, sepal_width, petal_length, petal_width, prediction
2022-12-28 17:24:34.045649, 1.0, 1.0, 1.0, 1.0, 1
2022-12-28 17:24:44.026432, 2.0, 2.0, 2.0, 2.0, 1
...
```

Thus the input, the timestamp and the predicted value should all be saved. We have implemented a solution in this file (called v2) if you need help.
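A minimal sketch of the logging part, using only the standard library. The helper name `add_to_database` is hypothetical, and the file name `prediction_database.csv` is taken from the later exercises. In the FastAPI app you would declare a `BackgroundTasks` parameter on the endpoint and register the helper with `background_tasks.add_task(...)`, so that it runs after the response has been sent.

```python
import csv
from datetime import datetime
from pathlib import Path

HEADER = ["time", "sepal_length", "sepal_width", "petal_length", "petal_width", "prediction"]


def add_to_database(path, sepal_length, sepal_width, petal_length, petal_width, prediction):
    """Append one timestamped row to the CSV database, writing the header on first use."""
    path = Path(path)
    write_header = not path.exists()
    with path.open("a", newline="") as file:
        writer = csv.writer(file)
        if write_header:
            writer.writerow(HEADER)
        writer.writerow(
            [datetime.now(), sepal_length, sepal_width, petal_length, petal_width, prediction]
        )


# Inside the endpoint (not run here), the call would be registered roughly as:
#   background_tasks.add_task(
#       add_to_database, "prediction_database.csv", 1.0, 1.0, 1.0, 1.0, 1
#   )
```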

-

Call your API a number of times to generate some dummy data in the database.
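One way to do this is a small script that sends requests with random inputs. This is only a sketch: it assumes the application is running locally and reuses the `iris_v1` URL from the `curl` example, so adjust `BASE_URL` if your endpoint has a different path. The function names and the value range are made up for illustration.

```python
import random
import urllib.parse
import urllib.request

BASE_URL = "http://127.0.0.1:8000/iris_v1/"  # assumed local endpoint, adjust as needed


def build_url(sepal_length, sepal_width, petal_length, petal_width):
    """Build the request URL with the four features as query parameters."""
    query = urllib.parse.urlencode(
        {
            "sepal_length": sepal_length,
            "sepal_width": sepal_width,
            "petal_length": petal_length,
            "petal_width": petal_width,
        }
    )
    return f"{BASE_URL}?{query}"


def send_dummy_requests(n=10, seed=0):
    """POST n requests with random feature values (requires the API to be running)."""
    rng = random.Random(seed)
    for _ in range(n):
        features = [round(rng.uniform(0.1, 8.0), 1) for _ in range(4)]
        request = urllib.request.Request(build_url(*features), data=b"", method="POST")
        with urllib.request.urlopen(request) as response:
            print(response.status, response.read().decode())


print(build_url(1.0, 1.0, 1.0, 1.0))
```

Once your application is up, calling `send_dummy_requests()` should add ten rows to the database.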

-

-

Create a new `data_drift.py` file where we are going to implement the data drifting detection and reporting. Start by adding both the real iris data and your generated dummy data as pandas dataframes.

```python
import pandas as pd
from sklearn import datasets

reference_data = datasets.load_iris(as_frame=True).frame
current_data = pd.read_csv('prediction_database.csv')
```

If done correctly you will most likely end up with two dataframes that look like

```txt
# reference_data
     sepal length (cm)  sepal width (cm)  petal length (cm)  petal width (cm)  target
0                  5.1               3.5                1.4               0.2       0
1                  4.9               3.0                1.4               0.2       0
...
148                6.2               3.4                5.4               2.3       2
149                5.9               3.0                5.1               1.8       2
[150 rows x 5 columns]

# current_data
time                        sepal_length  sepal_width  petal_length  petal_width  prediction
2022-12-28 17:24:34.045649  1.0           1.0          1.0           1.0          1
...
2022-12-28 17:24:34.045649  1.0           1.0          1.0           1.0          1
[10 rows x 5 columns]
```

Standardize the dataframes such that they have the same column names and drop the time column from the `current_data` dataframe.

-

We are now ready to generate some reports about data drifting:

-

Try executing the following code:

```python
from evidently.report import Report
from evidently.metric_preset import DataDriftPreset

# `reference` and `current` are the standardized dataframes from the previous step
report = Report(metrics=[DataDriftPreset()])
report.run(reference_data=reference, current_data=current)
report.save_html('report.html')
```

and open the generated `.html` page. What does it say about your data? Has it drifted? Make sure to poke around to understand what the different plots are actually showing.

-

Data drifting is not the only kind of reporting Evidently can make. We can also get reports on the data quality. First, try adding a few `NaN` values to your reference data. Second, try changing the report to

```python
from evidently.metric_preset import DataDriftPreset, DataQualityPreset

report = Report(metrics=[DataDriftPreset(), DataQualityPreset()])
```

and re-run the report. Check out the newly generated report. Again, go over the generated plots and make sure that it picked up on the missing values you just added.

-

The final report preset we will look at is the `TargetDriftPreset`. Target drift means that our model is over/under predicting certain classes, or in general terms that the distribution of predicted values differs from the ground truth distribution of targets. Try adding the `TargetDriftPreset` to the `Report` class, re-run the analysis and inspect the result. Have your targets drifted?
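To make the idea of over/under predicting concrete, the sketch below compares how often each class appears among the true targets and among the predictions. The helper names and the toy labels are made up for illustration, and this is not how Evidently computes target drift.

```python
from collections import Counter


def class_proportions(labels):
    """Return the fraction of samples belonging to each class."""
    counts = Counter(labels)
    total = len(labels)
    return {cls: count / total for cls, count in sorted(counts.items())}


def proportion_gap(targets, predictions):
    """Per-class difference: predicted proportion minus true proportion."""
    true_p = class_proportions(targets)
    pred_p = class_proportions(predictions)
    classes = sorted(set(true_p) | set(pred_p))
    return {cls: pred_p.get(cls, 0.0) - true_p.get(cls, 0.0) for cls in classes}


targets = [0, 0, 0, 1, 1, 1, 2, 2, 2, 2]
predictions = [0, 0, 1, 1, 1, 1, 1, 1, 2, 2]

# A positive gap means the class is predicted more often than it actually occurs.
print(proportion_gap(targets, predictions))
```

In this toy data class 1 is over predicted, while classes 0 and 2 are under predicted.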

-

-

Evidently reports are meant for debugging, exploration and reporting of results. However, as we stated in the beginning, what we are actually interested in are methods for automatically detecting when we are beginning to drift. For this we will need to look at Test and TestSuites:

-

Let's start with a simple test that checks if there are any missing values in our dataset:

```python
from evidently.test_suite import TestSuite
from evidently.tests import TestNumberOfMissingValues

# `reference` and `current` are the same standardized dataframes as before
data_test = TestSuite(tests=[TestNumberOfMissingValues()])
data_test.run(reference_data=reference, current_data=current)
```

Again, we could run `data_test.save_html` to get a nice view of the results (feel free to try it out), but additionally we can also call the `data_test.as_dict()` method, which will give a dict with the test results. What dictionary key contains whether all tests have passed or not?

-

Take a look at this colab notebook that contains all tests implemented in Evidently. Pick 5 tests of your choice, where at least 1 fails by default, and implement them as a `TestSuite`. Then try changing the arguments of the tests so they better fit your use case and get them all passing.

-

-

(Optional) When doing monitoring in practice, we are not always interested in running on all data collected from our API, but maybe only the last `N` entries or maybe just the last hour of observations. Since we are already logging the timestamps of when our API is called, we can use that for filtering. Implement a simple filter that either takes an integer `n` and returns the last `n` entries in our database, or takes some datetime `t` and filters away observations earlier than this.

-

Evidently by default only supports structured data, e.g. tabular data (so does nearly every other framework). Thus, the question becomes how we can extend this to unstructured data such as images or text. The solution is to extract structured features from the data, which we can then run the analysis on.

-

(Optional) For images the simple solution would be to flatten the images and consider each pixel a feature. However, this does not work in practice because changes in individual pixels do not really tell us anything about the image. Instead we should derive some features such as:

- Average brightness

- Contrast of image

- Image sharpness

- ...

These are all numbers that can make up a feature vector for an image. Try doing this yourself, for example by extracting such features from the MNIST and FashionMNIST datasets, and check if you can detect a drift between the two sets.
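A minimal sketch of such a feature extractor using NumPy, assuming grayscale images stored as 2D arrays. The exact definitions are choices made here for illustration: brightness as the mean pixel value, contrast as the standard deviation of the pixel values, and sharpness as the average absolute difference between neighboring pixels.

```python
import numpy as np


def image_features(image):
    """Summarize a grayscale image (2D array) as [brightness, contrast, sharpness]."""
    image = np.asarray(image, dtype=np.float64)
    brightness = image.mean()
    contrast = image.std()
    # Average absolute difference between vertical and horizontal neighbors.
    sharpness = np.abs(np.diff(image, axis=0)).mean() + np.abs(np.diff(image, axis=1)).mean()
    return np.array([brightness, contrast, sharpness])


flat = np.full((28, 28), 0.5)                   # uniform gray image
checker = np.indices((28, 28)).sum(axis=0) % 2  # checkerboard of 0s and 1s

print(image_features(flat))     # brightness 0.5, contrast 0, sharpness 0
print(image_features(checker))  # brightness 0.5, contrast 0.5, sharpness 2
```

Both images have the same brightness, but contrast and sharpness separate them clearly. Applying the function to every image in two datasets gives two small tables with three columns each, which can then be compared with the tabular drift tools.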

-

(Optional) For text a common approach is to extract some higher level embedding such as the very classical GloVe embedding. Try following this tutorial to understand how drift detection is done on text.

-

Let's instead take a deep learning based approach to doing this. Let's consider the CLIP model, which is trained to match images with text descriptions. For our purpose this is perfect because we can use the model to get abstract feature embeddings for both images and text:

```python
from PIL import Image
import requests

# requires transformers package: pip install transformers
from transformers import CLIPProcessor, CLIPModel

model = CLIPModel.from_pretrained("openai/clip-vit-base-patch32")
processor = CLIPProcessor.from_pretrained("openai/clip-vit-base-patch32")

url = "http://images.cocodataset.org/val2017/000000039769.jpg"
image = Image.open(requests.get(url, stream=True).raw)

# set either text=None or images=None when only the other is needed
inputs = processor(text=["a photo of a cat", "a photo of a dog"], images=image, return_tensors="pt", padding=True)

img_features = model.get_image_features(inputs['pixel_values'])
text_features = model.get_text_features(inputs['input_ids'], inputs['attention_mask'])
```

Both `img_features` and `text_features` are in this case abstract feature embeddings with 512 dimensions per image or text, which should be able to tell us something about our data distribution. Try using this method to extract features on two different datasets like CIFAR10 and SVHN if you want to work with vision, or IMDB movie reviews and Amazon reviews for text. After extracting the features, try running some of the data distribution testing you just learned about.
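Since the drift tools used above expect tabular data, one option is to put the embeddings into a dataframe with one column per embedding dimension. This is a sketch that assumes the features have already been converted to a NumPy array (for a PyTorch tensor, for example, via `.detach().numpy()`). The helper name and the column prefix `emb_` are arbitrary choices, and random numbers stand in for real embeddings.

```python
import numpy as np
import pandas as pd


def embeddings_to_frame(features, prefix="emb_"):
    """Turn an (n_samples, n_dims) array into a dataframe with one column per dimension."""
    features = np.asarray(features)
    columns = [f"{prefix}{i}" for i in range(features.shape[1])]
    return pd.DataFrame(features, columns=columns)


# Random stand-ins for real embeddings extracted from two datasets.
rng = np.random.default_rng(0)
reference_frame = embeddings_to_frame(rng.normal(size=(100, 512)))
current_frame = embeddings_to_frame(rng.normal(loc=0.5, size=(100, 512)))

print(reference_frame.shape, current_frame.shape)  # (100, 512) (100, 512)
```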

-

-

(Optional) If we have multiple applications and want to run monitoring for each application, we often also want the monitoring to be a deployed application (that only we can access). Implement a `/monitoring/` endpoint that does all the reporting we just went through such that you have two endpoints:

```txt
http://127.0.0.1:8000/iris_infer/?sepal_length=1.0&sepal_width=1.0&petal_length=1.0&petal_width=1.0 # user endpoint
http://127.0.0.1:8000/iris_monitoring/ # monitoring endpoint
```

Our monitoring endpoint should return an HTML page either showing an Evidently report or test suite. Try implementing this endpoint. We have implemented a solution in this file if you need help with how to return an HTML page from a FastAPI application.

-

As a final exercise, we recommend that you try implementing this to run directly in the cloud. You will need to implement this in a container, e.g. the GCP Run service, because the data gathering from the endpoint should still be implemented as a background task. For this to work you will need to change the following:

-

Instead of saving the input to a local file you should either store it in a GCP bucket or a BigQuery SQL table (this is a better solution, but also out of scope for this course).

-

You can either run the data analysis locally by just pulling the predictions and training data from cloud storage, or alternatively you can deploy this as its own endpoint that can be invoked. For the latter option we recommend that this should require authentication.

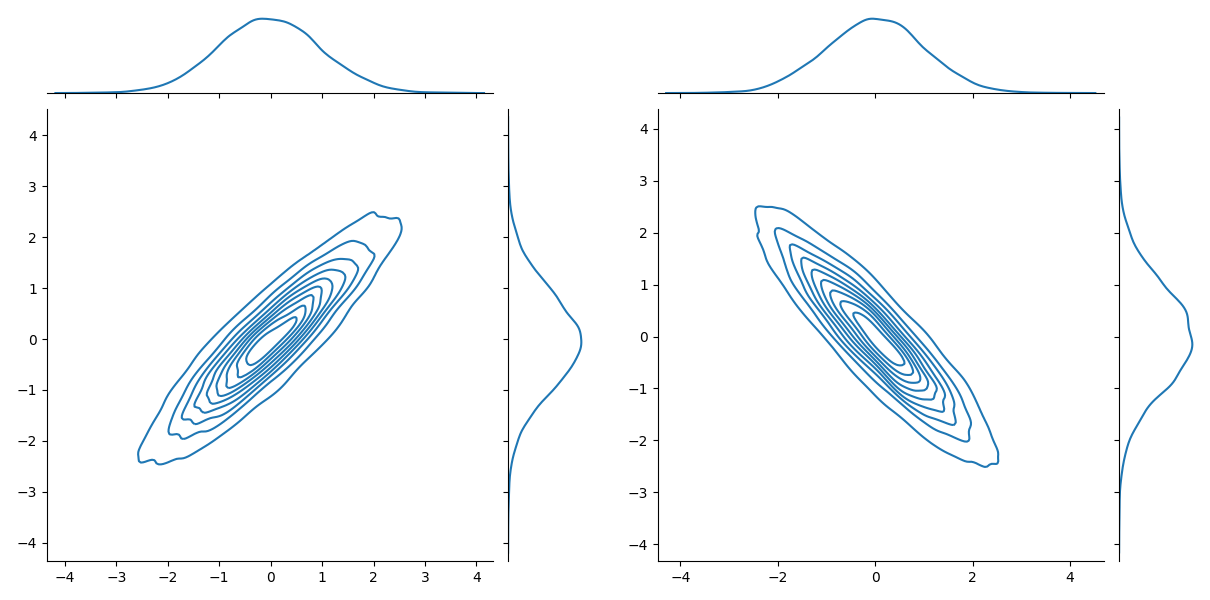

That ends the module on detection of data drifting, data quality etc. If this has not already been made clear, monitoring of machine learning applications is an extremely hard discipline because it is not clear-cut when we should actually respond to a feature beginning to drift and when it is probably fine. It comes down to the individual application what kind of rules should be implemented. Additionally, the tools presented here are in no way complete and are especially limited in one way: they only consider the marginal distribution of the data. Every analysis that we have done has been on the distribution per feature (the marginal distribution). However, as the accompanying image shows, it is possible for data to have drifted to another distribution with the marginals being approximately the same.

There are methods such as Maximum Mean Discrepancy (MMD) tests that are able to do testing on multivariate distributions, which you are free to dive into. In this course we will just always recommend considering multiple features when making decisions regarding your deployed applications.
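To illustrate both points, the sketch below builds two small 2D datasets whose per-feature values are identical but whose joint distributions differ, and then computes a biased estimate of the squared MMD with a Gaussian kernel. The data, the bandwidth `sigma=1.0` and the function names are assumptions chosen for illustration. A real test would also need a significance threshold, for example from a permutation test.

```python
import math


def gaussian_kernel(a, b, sigma=1.0):
    """Gaussian (RBF) kernel between two points given as tuples."""
    squared_distance = sum((ai - bi) ** 2 for ai, bi in zip(a, b))
    return math.exp(-squared_distance / (2 * sigma**2))


def mean_kernel(xs, ys, sigma=1.0):
    """Average kernel value over all pairs (x, y)."""
    return sum(gaussian_kernel(x, y, sigma) for x in xs for y in ys) / (len(xs) * len(ys))


def mmd_squared(xs, ys, sigma=1.0):
    """Biased estimate of the squared Maximum Mean Discrepancy."""
    return mean_kernel(xs, xs, sigma) + mean_kernel(ys, ys, sigma) - 2 * mean_kernel(xs, ys, sigma)


values = [0.0, 1.0, 2.0, 3.0, 4.0]
reference = [(v, v) for v in values]      # features move together
current = [(v, 4.0 - v) for v in values]  # features move in opposite directions

# Each feature on its own looks identical in both datasets...
for dim in range(2):
    print(sorted(p[dim] for p in reference) == sorted(p[dim] for p in current))  # True

# ...but the joint distributions differ, which the MMD estimate picks up.
print(mmd_squared(reference, reference))  # 0.0
print(mmd_squared(reference, current))    # a positive value
```

A per-feature drift check would report no change between these two datasets, while the multivariate statistic is clearly non-zero.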