Monitoring

In this module we look at the more classical monitoring of applications. The key concept we often work with here is called telemetry. Telemetry in general refers to any automatic measurement and transmission of data from our application. It could be numbers such as:

- The number of requests our application receives per minute/hour/day. This number is of interest because it is directly proportional to the running cost of the application.

- The amount of time (on average) our application runs per request. This number is of interest because it is most likely the core contributor to the latency that our users experience (which we want to be low).

- ...
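As a minimal sketch of how the two numbers above could be derived, assume we have a log with one entry per request. The `requests_log` data and both helper names are made up for illustration; a real application would collect these values through an instrumentation library rather than by hand.

```python
from collections import Counter
from statistics import mean

# Hypothetical request log: (arrival time in seconds, handling time in seconds).
requests_log = [
    (0.0, 0.120),
    (12.5, 0.080),
    (59.9, 0.100),
    (61.0, 0.300),
    (119.0, 0.200),
]


def requests_per_minute(log):
    """Count requests in each one-minute window, keyed by minute index."""
    return dict(Counter(int(timestamp // 60) for timestamp, _ in log))


def average_latency(log):
    """Mean handling time per request, in seconds."""
    return mean(duration for _, duration in log)


per_minute = requests_per_minute(requests_log)
avg_latency = average_latency(requests_log)
print(per_minute)
print(f"{avg_latency:.3f} s")
```

Both values are aggregates over time, which is what makes them metrics rather than logs or traces.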

In general there are three different kinds of telemetry we are interested in:

| Name | Description | Example | Purpose |
|---|---|---|---|
| Metrics | Metrics are quantitative measurements of the system. They are usually numbers that are aggregated over a period of time. | The number of requests per minute. | Metrics are used to get an overview of the system, often through dashboards. |
| Logs | Logs are textual or structured records generated by applications. They provide a detailed account of events, errors, warnings, and informational messages that occur during the operation of the system. | System logs, error logs | Logs are essential for diagnosing issues, debugging, and auditing. They provide a detailed history of what happened in a system, making it easier to trace the root cause of problems and track the behavior of components over time. |
| Traces | Traces are detailed records of specific transactions or events as they move through a system. A trace typically includes information about the sequence of operations, timing, and dependencies between different components. | Distributed tracing in a microservices architecture | Traces help in understanding the flow of a request or a transaction across different components. They are valuable for identifying bottlenecks, understanding latency, and troubleshooting issues related to the flow of data or control. |

In this module we are mainly going to focus on metrics.

Local instrumentator

Before we look into the cloud, let's at least conceptually understand how a given instance of an app can expose values that we may be interested in monitoring.

The standard framework for exposing metrics is called Prometheus. Prometheus is a time series database that is designed to store metrics. It is also designed to make applications very easy to instrument and to scale to large amounts of data. The way Prometheus works is that the instrumented application exposes a `/metrics` endpoint that can be queried to get the current state of the metrics. The metrics are exposed in a format called the Prometheus text format.
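To get a feel for what a `/metrics` endpoint returns, the sketch below renders a single counter by hand in the Prometheus text format. The metric name `app_requests_total`, its labels, and the helper `render_counter` are hypothetical. In practice a client library generates this text for you.

```python
def render_counter(name, help_text, samples):
    """Render one counter in the Prometheus text format.

    `samples` maps a tuple of (label, value) pairs to the counter value.
    """
    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} counter"]
    for labels, value in samples.items():
        label_str = ",".join(f'{key}="{val}"' for key, val in labels)
        lines.append(f"{name}{{{label_str}}} {value}")
    return "\n".join(lines) + "\n"


# Hypothetical metric: requests handled so far, split by handler and status.
exposition = render_counter(
    "app_requests_total",
    "Total number of handled requests.",
    {
        (("handler", "/predict"), ("status", "2xx")): 42.0,
        (("handler", "/predict"), ("status", "5xx")): 1.0,
    },
)
print(exposition)
```

Each metric gets a `# HELP` line, a `# TYPE` line, and one sample line per label combination.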

❔ Exercises

- Start by installing `prometheus-fastapi-instrumentator` in Python. This will allow us to easily instrument our FastAPI application with Prometheus.

- Create a simple FastAPI application in a file called `app.py`. You can reuse any application from the previous module on APIs. To that file now add the following code:

    ```python
    from prometheus_fastapi_instrumentator import Instrumentator

    # your app code here

    Instrumentator().instrument(app).expose(app)
    ```

    This will instrument your application with Prometheus and expose the metrics on the `/metrics` endpoint.

- Run the app using the `uvicorn` server. Make sure that the app exposes the endpoints you expect it to expose, but also make sure to check out the `/metrics` endpoint.

- The `/metrics` endpoint exposes multiple metrics. Each metric is announced by a `# TYPE <name> <type>` line and followed by sample lines of the form `<name>{<label>="<value>"} <value>`, i.e. it is essentially a dictionary of key-value pairs with the added functionality of a `<type>`. Look at this page over the different types Prometheus metrics can have and try to understand the different metrics being exposed.

- Look at the documentation for `prometheus-fastapi-instrumentator` and try to add at least one more metric to your application. Rerun the application and confirm that the new metric is being exposed.
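When inspecting the `/metrics` output from the exercises above, it can help to load the text into Python. The following is a rough sketch of a parser for the common case, using only the standard library. The sample payload and the function name `parse_metrics` are illustrative assumptions, and the regular expressions do not cover every corner of the format (for example escaped quotes in label values or optional timestamps).

```python
import re

# One sample line: metric name, optional {labels}, then the value.
SAMPLE_RE = re.compile(
    r"^(?P<name>[a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(?P<labels>.*)\})?\s+(?P<value>\S+)"
)
LABEL_RE = re.compile(r'(\w+)="([^"]*)"')


def parse_metrics(text):
    """Return ({metric name: type}, [(name, labels, value), ...])."""
    types, samples = {}, []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        if line.startswith("# TYPE"):
            _, _, name, metric_type = line.split(maxsplit=3)
            types[name] = metric_type
        elif line.startswith("#"):
            continue  # HELP lines and other comments
        else:
            match = SAMPLE_RE.match(line)
            if match is None:
                continue  # skip lines this simple sketch cannot read
            labels = dict(LABEL_RE.findall(match["labels"] or ""))
            samples.append((match["name"], labels, float(match["value"])))
    return types, samples


# Hypothetical payload, shaped like what a /metrics endpoint returns.
payload = """\
# HELP http_requests_total Total number of requests.
# TYPE http_requests_total counter
http_requests_total{handler="/predict",method="POST",status="2xx"} 3.0
# TYPE app_in_progress gauge
app_in_progress 1.0
"""

types, samples = parse_metrics(payload)
print(types)
print(samples)
```

Grouping the samples by the `types` mapping makes it easier to see which values only ever increase (counters) and which can go up and down (gauges).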

Cloud monitoring

Any self-respecting cloud platform will have some kind of monitoring system. GCP has a service called Monitoring that is designed to monitor all the different services.

By default it monitors a lot of metrics out of the box. However, the question is what to do if we want to monitor more than the default metrics. The complexity that comes with monitoring in the cloud is that we need more than one container. We need at least one container that actually runs the application and exposes the `/metrics` endpoint, and then we need another container that collects the metrics from the first container and stores them in a database. To implement such a system of containers that need to talk to each other, we in general need a container orchestration system such as Kubernetes. This is out of scope for this course, but we can use a feature of Cloud Run called sidecar containers to achieve the same effect. A sidecar container is a container that runs alongside the main container and can be used to do things such as collecting metrics.
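Conceptually, the collecting container does little more than poll the application's `/metrics` endpoint at a fixed interval and store what it gets back. The sketch below illustrates that loop with the standard library only. The port `8080`, the 15 second interval, and the in-memory list used as a "database" are assumptions for illustration, and a real sidecar would run a proper collector instead of this script.

```python
import time
import urllib.request


def fetch_metrics(url, timeout=5.0):
    """Download the raw text served by a /metrics endpoint."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8")


def scrape(url, store, rounds, interval=15.0, fetch=fetch_metrics, sleep=time.sleep):
    """Poll `url` `rounds` times and append (timestamp, payload) to `store`."""
    for i in range(rounds):
        try:
            store.append((time.time(), fetch(url)))
        except OSError as error:  # urllib.error.URLError is a subclass of OSError
            print(f"scrape failed: {error}")
        if i < rounds - 1:
            sleep(interval)


# Demo with stand-in fetch and sleep functions so the sketch runs without a server.
# Inside a sidecar you would drop both overrides and point `url` at the main container.
collected = []
scrape(
    "http://localhost:8080/metrics",  # assumed address of the application container
    collected,
    rounds=3,
    fetch=lambda url: "app_requests_total 1.0\n",
    sleep=lambda seconds: None,
)
print(f"collected {len(collected)} scrapes")
```

The application only has to expose its current state, and the collector decides how often to sample it and where the samples end up.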

❔ Exercises

-

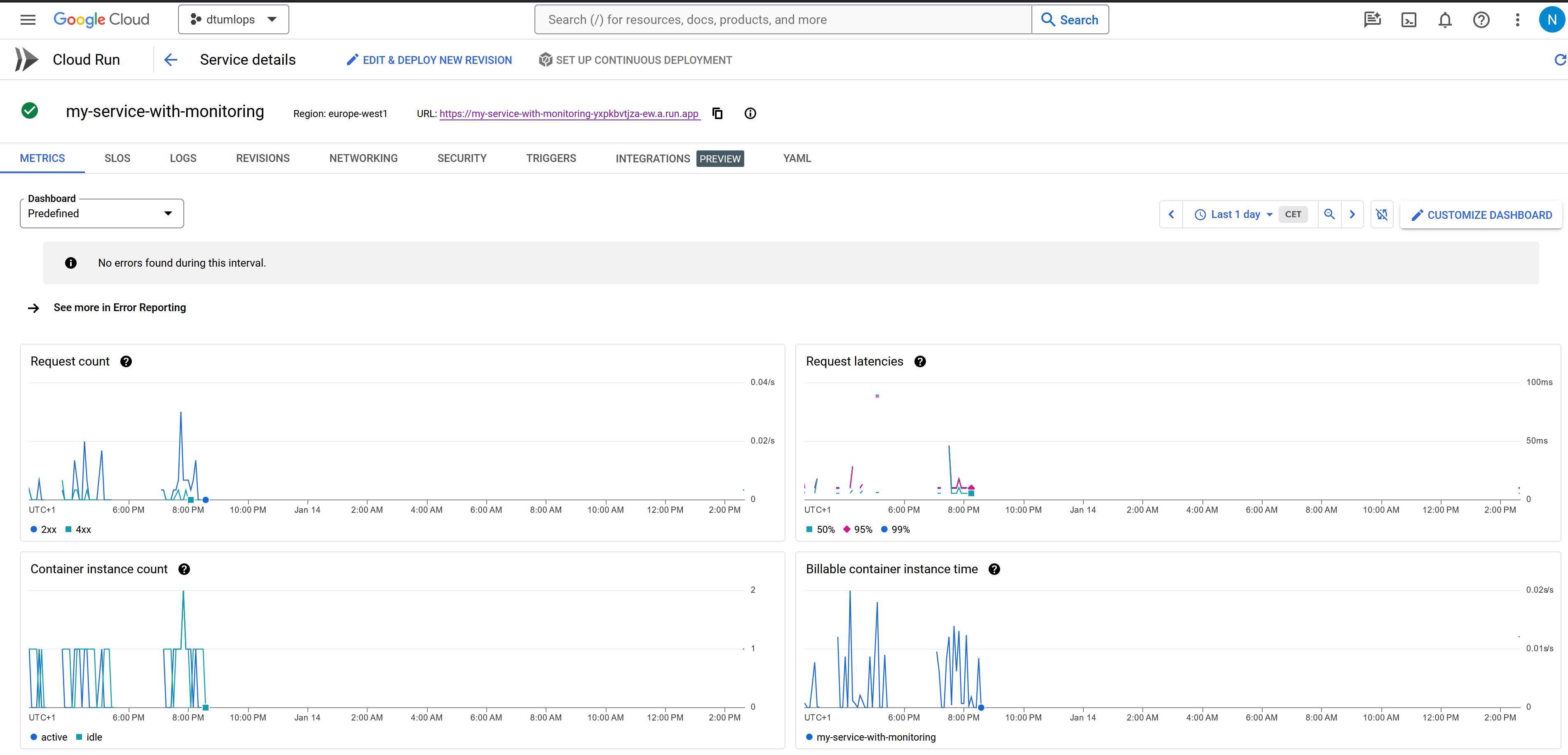

Overall we recommend that you just become familiar with the monitoring tab for your cloud run service (see image) above. Try to invoke your service a couple of times and see what happens to the metrics over time.

- (Optional) If you really want to load test your application, we recommend checking out the tool locust. Locust is a Python-based load testing tool that can be used to simulate many users accessing your application at the same time.

-

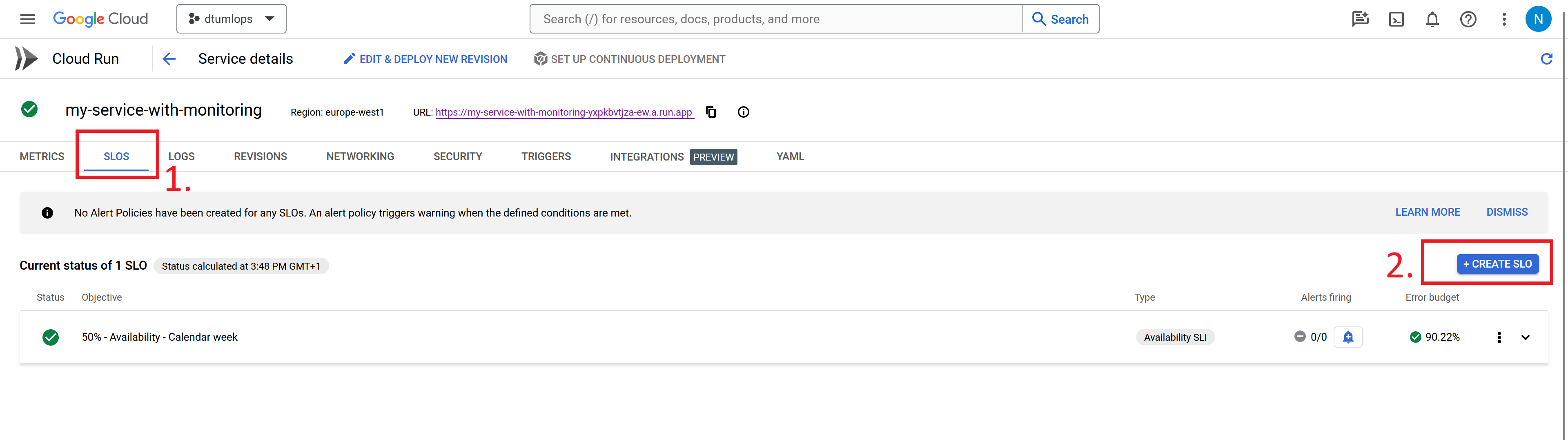

Try creating a service level objective (SLO). In short a SLO is a target for how well your application should be performing. Click the

Create SLObutton and fill it out with what you consider to be a good SLO for your application. -

(Optional) To expose our own metrics we need to setup a sidecar container. To do this follow the instructions here. We have setup a simple example that uses fastapi and prometheus that you can find here. After you have correctly setup the sidecar container you should be able to see the metrics in the monitoring tab.
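To make the SLO exercise above more concrete, here is a small sketch of how a measured value can be compared against a target. It assumes an availability-style objective (the fraction of requests that succeed). The request counts and the 99.5% target are made-up numbers, and the "error budget" helper is one common way of framing the result rather than something the Cloud Console requires.

```python
def availability(good_requests, total_requests):
    """Fraction of requests that met the objective."""
    if total_requests == 0:
        return 1.0  # no traffic means nothing violated the objective
    return good_requests / total_requests


def error_budget_remaining(good_requests, total_requests, slo_target):
    """Share of the allowed failures that is still unused (1.0 = untouched).

    The result becomes negative once more requests have failed than the
    target allows.
    """
    allowed_failures = (1.0 - slo_target) * total_requests
    actual_failures = total_requests - good_requests
    if allowed_failures == 0:
        return 1.0 if actual_failures == 0 else 0.0
    return 1.0 - actual_failures / allowed_failures


# Hypothetical month: 10,000 requests, 10 of which failed, with a 99.5% target.
slo_target = 0.995
measured = availability(9_990, 10_000)
budget = error_budget_remaining(9_990, 10_000, slo_target)
print(f"availability={measured:.4f}, SLO met: {measured >= slo_target}")
print(f"error budget remaining: {budget:.0%}")
```

A stricter target shrinks the number of allowed failures, so the same ten failed requests would use up a larger share of the budget.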

Alert systems

A core problem within monitoring is alert systems. The alert system is in charge of sending out alerts to the relevant people when some telemetry or metric we are tracking is not behaving as it should. When and how many alerts should be sent out is a subjective choice, and in general it should be proportional to how important the metric/telemetry is. We commonly run into what is referred to as the Goldilocks problem, where we want just the right amount of alerts; however, it is more often the case that we either have

- Too many alerts, such that they become irrelevant and the really important ones are overlooked, often referred to as alert fatigue

- Or alternatively, too few alerts, so that problems that should have triggered an alert are not dealt with when they happen, which can have unforeseen consequences.

Therefore, setting up proper alert systems can be as challenging as setting up the systems that actually collect the metrics we want to trigger alerts on.
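One simple way to balance the two failure modes above is to combine a threshold with a cooldown period, so that a metric that stays above the threshold does not send a new alert on every measurement. The class below is an illustrative sketch, not how any particular cloud provider implements alerting. The threshold, the cooldown, and the readings are invented values.

```python
class ThresholdAlert:
    """Fire when a value exceeds `threshold`, at most once per cooldown period."""

    def __init__(self, threshold, cooldown_seconds):
        self.threshold = threshold
        self.cooldown_seconds = cooldown_seconds
        self._last_fired = None

    def check(self, value, now):
        """Return True if an alert should be sent for `value` at time `now`."""
        if value <= self.threshold:
            return False
        recently_fired = (
            self._last_fired is not None
            and now - self._last_fired < self.cooldown_seconds
        )
        if recently_fired:
            return False  # still cooling down: suppress the repeat
        self._last_fired = now
        return True


# Hypothetical readings: (time in seconds, requests per minute).
alert = ThresholdAlert(threshold=100, cooldown_seconds=300)
readings = [(0, 50), (60, 150), (120, 180), (400, 170), (460, 90)]
fired = [timestamp for timestamp, value in readings if alert.check(value, timestamp)]
print(fired)
```

A cooldown that is too short brings back alert fatigue, while one that is too long can hide a problem that comes back, so both parameters need to be tuned to how important the metric is.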

❔ Exercises

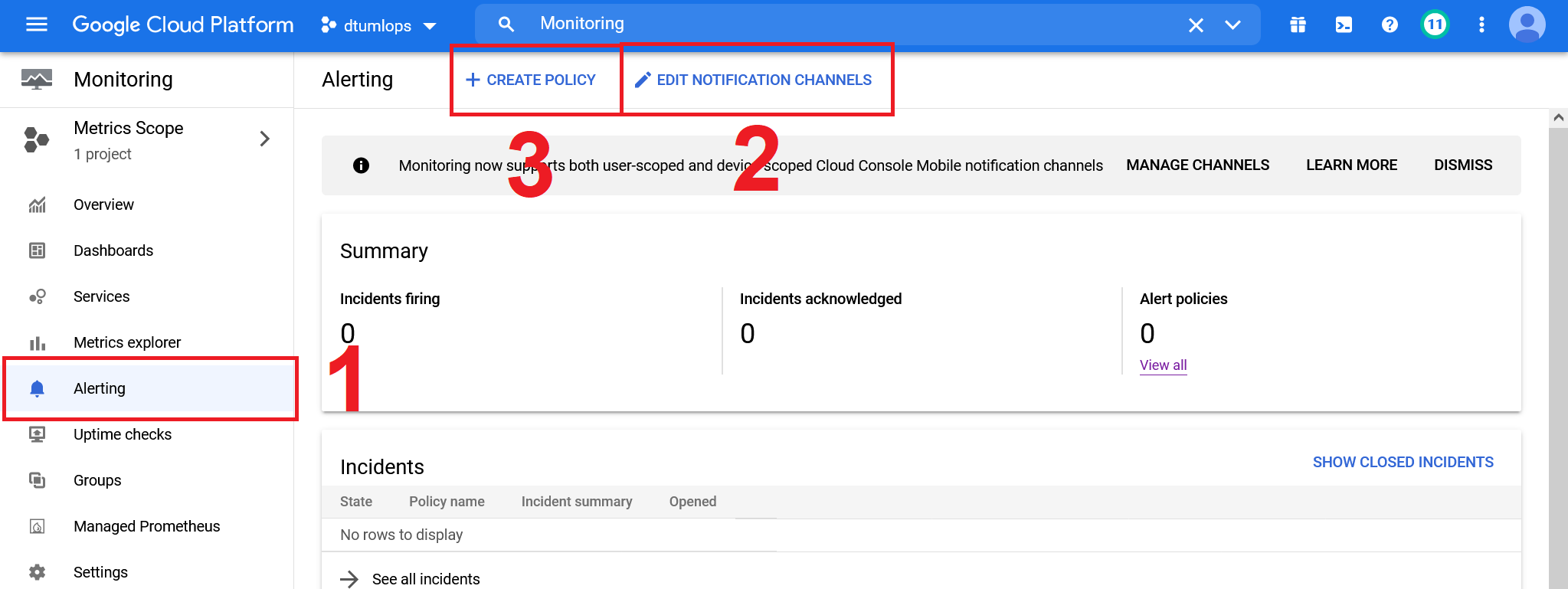

In this exercise we are going to look at how we can set up automatic alerting such that we get a message every time one of our applications is not behaving as expected.

-

Start by setting up an notification channel. A recommend setting up with an email.

-

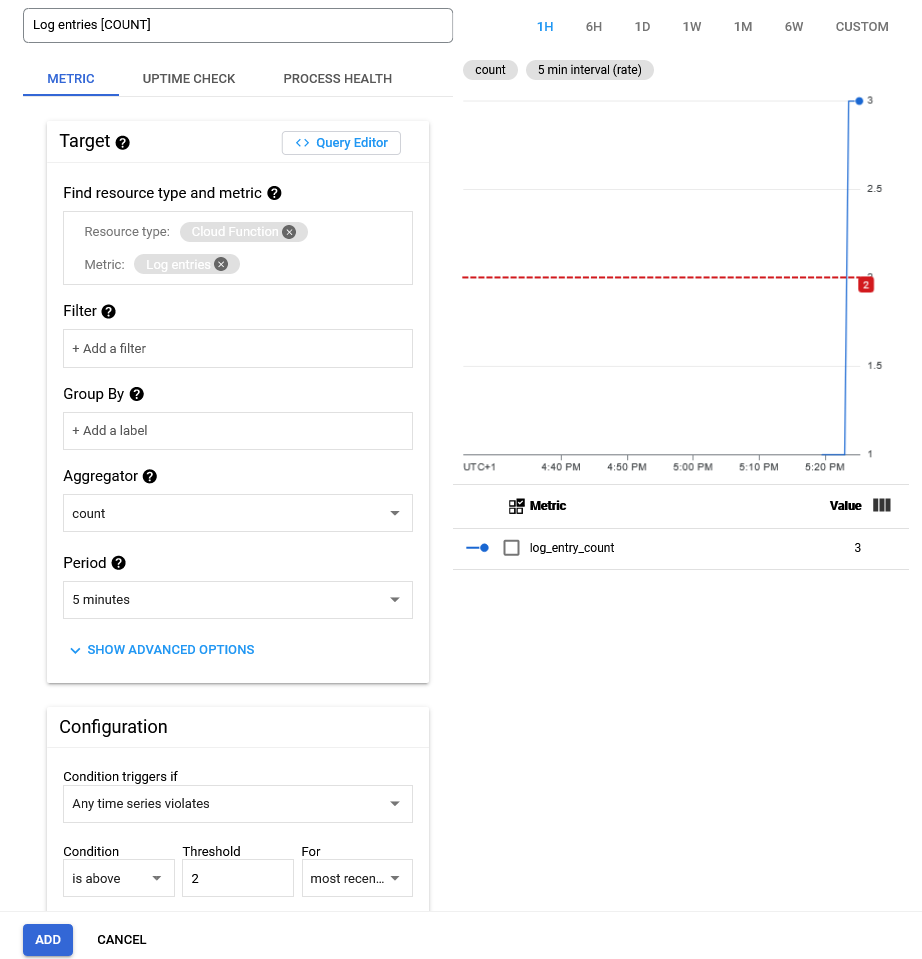

Next lets create a policy. Clicking the

Add Conditionshould bring up a window as below. You are free to setup the condition as you want but the image is one way bo setup an alert that will react to the number of times an cloud function is invoked (actually it measures the amount of log entries from cloud functions). -

After adding the condition, add the notification channel you created in one of the earlier steps. Remember to also add some documentation that should be send with the alert to better describe what the alert is actually doing.

- When the alert is set up you need to trigger it. If you set up the condition as in the image above, you just need to invoke the cloud function many times, for example with a small script run from your laptop that calls the function in a loop (you need to change the URL and payload depending on your function).

- Make sure that you get the alert through the notification channel you set up.
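For the triggering step above, a script along the following lines can call a cloud function repeatedly. This is a sketch under assumptions: it uses only the standard library, it assumes the function accepts a JSON body via POST, and the URL and payload in the usage comment are placeholders that must be replaced with your own.

```python
import json
import urllib.request


def call_function(url, payload, timeout=10.0):
    """POST `payload` as JSON to `url` and return the HTTP status code."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status


def invoke_many(url, payload, n_calls, send=call_function):
    """Call the function `n_calls` times and count the responses per status."""
    counts = {}
    for _ in range(n_calls):
        try:
            status = send(url, payload)
        except OSError:  # covers urllib.error.URLError and HTTPError
            status = "failed"
        counts[status] = counts.get(status, 0) + 1
    return counts


# Usage (placeholder values, adjust to your own function before running):
# url = "https://REGION-PROJECT_ID.cloudfunctions.net/FUNCTION_NAME"
# payload = {"input_data": [1.0, 2.0, 3.0]}
# print(invoke_many(url, payload, n_calls=1000))
```

The returned dictionary gives a quick summary of how many calls succeeded, which is handy for checking that the invocations actually reached the function before waiting for the alert.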